‘A clear act of tokenism’

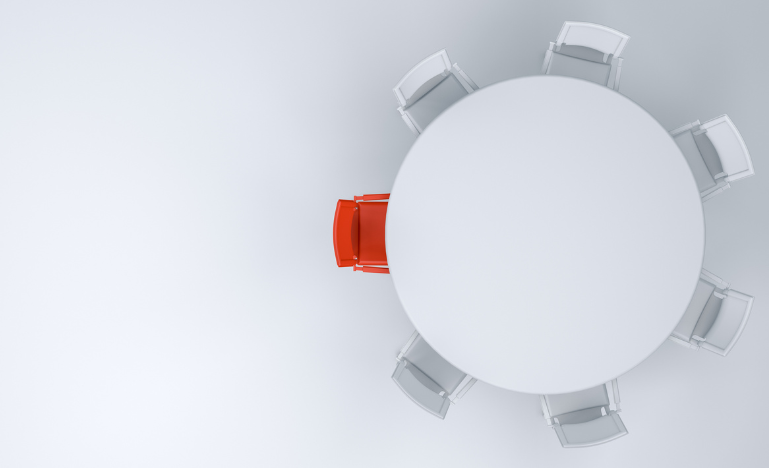

How do we tap the vast potential of artificial intelligence systems without exacerbating biases inherent in the data they train on? It starts with having the right people at the table.

Dr. Gideon Christian has been researching the legal impacts of artificial intelligence for years.

So, when the federal government launched its first AI Strategy Task Force in September, he was interested in seeing how it would gather expert advice on building safe systems and strengthening public trust in the technology.

The 27 initial task force members included renowned researchers, instructors, lawyers, technical experts and entrepreneurs in AI, implementation, data storage and governance.

What struck Christian wasn’t who was on the list of members, but who wasn’t: not one of them was Black.

“In setting up that task force, there should be proactive steps to ensure that individuals from diverse communities are represented, especially from the Black community, because research has shown that the Black community is one racial group that is most adversely impacted by artificial intelligence technology,” he says in an interview on the CBA’s Verdicts and Voices podcast.

“They deserve a clear voice, and not just a clear voice; it will require members of this community who have expertise.”

Christian teaches at the University of Calgary's School of Law, where he also holds the University Excellence Research Chair in AI and Law. Three weeks after seeing the list, he was one of 60 signatories to an open letter to Prime Minister Mark Carney and Minister of Artificial Intelligence Evan Solomon calling for the inclusion of Black Canadians with relevant expertise.

A 28th member was quietly added to the task force the same day the letter was sent. However, while the new member was Black, they were still a law student and not yet an expert in the field of AI.

“This is not really representation,” Christian says.

“This is a clear act of tokenism.”

Algorithmic racism, also known as AI bias, refers to the tendency of AI systems to produce outputs that are systematically skewed or unfair when applied to specific individuals or groups.

“We don't see some of these things as just technical error. Sometimes they are embedded in the broader structures of power, or historical discrimination, or just social hierarchies,” says Jake Effoduh, an assistant professor at Toronto Metropolitan University’s Lincoln Alexander School of Law and former chief counsel for the Africa-Canada AI and Data Innovation Consortium.

“This is often because maybe there are issues in the data, or there are issues in the modelling of the AI system. Maybe it's just an objective choice, maybe it wasn't designed for certain people, or it might be in terms of deployment context.”

He notes that most AI systems are trained on data, and all data comes from the past.

“If the data we have on policing has historically targeted specific people or historically criminalized certain communities, the AI system would imbibe that particular data pathway.”

This bias results in differential treatment of, or outcomes for, individuals. Examples include cameras that fail to recognize certain skin tones and hand sanitizer dispensers that don't work effectively for all types of hands, he says.

For instance, in an experiment where Effoduh asked people to hold phones, more women than men complained that the phones did not fit their hands.

“Maybe they're not really designed by more women. We can replicate the same things when it comes to other embedded normative assumptions, or other ways that technology, embedded software and hardware, can reproduce existing stereotypes that we see in society.”

Within the criminal justice system, the use of AI in recidivism risk assessment, predictive policing and facial recognition has had serious consequences for people’s lives.

“We have seen cases where the use of this tool has resulted in false arrest of individuals for crimes they did not commit because the AI tool matches their face to the face of the suspect,” Christian says.

“And in most cases, these individuals are usually Black people.”

The Black community is one of the communities most adversely affected by facial recognition technology, he says. He’s aware of at least eight cases in Canada where immigration authorities have sought to revoke the refugee status of successful refugee claimants because AI matched their faces to those of other people in the immigration database.

Beyond the criminal justice system, Christian's AI research has led him to examine biases in the health sector, mortgages, and human resources.

“In almost each and every one of these sectors that I have researched, there are significant cases of racial bias arising from the deployment of AI in this field,” he says.

“That is why I'm really concerned about the future of AI as it impacts Black communities.”

Effoduh says bias prediction models can help reduce or eliminate some of these biases, but to be effective they require the knowledge and expertise of people who understand these issues.

“An AI system, just by its nature, even without the intent of the person developing it or designing it wanting to make it racist or discriminatory, it just seems to inherit and amplify the historical inequities that are embedded in the data and embedded in the design, and even in the deployment processes as well.”

If we are serious about the legitimacy, equity and democratic strength of AI governance, he says, a lot depends on who is at the table.

“I don't think this is about any symbolic inclusion.”

Instead, Effoduh says it's about how Black scholars can add value.

“When that value is not added, we know who bears the brunt.”

Listen to the full interview to hear what our guests think the AI Strategy Task Force should prioritize and how to better mitigate the risks of exacerbating systemic biases in AI.